Hongyin Luo 罗鸿胤

Working on AI reasoning and efficiency.

32-G440

32 Vassar St.,

Cambridge, MA 02139

Hongyin is a research scientist at MIT CSAIL, working with Dr. Jim Glass in the Spoken Language Systems (SLS) group. He completed his Ph.D. at MIT EECS in May 2022, with a dissertation focused on self-training for natural language processing.

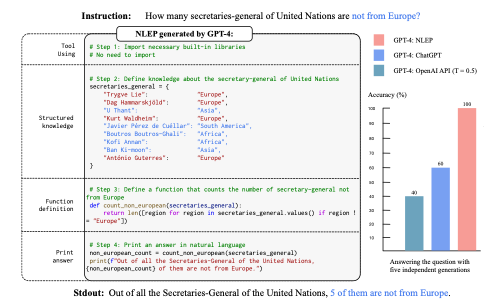

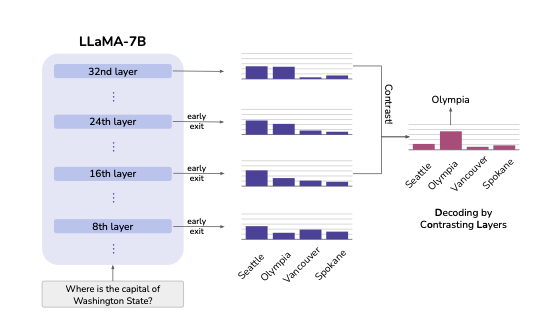

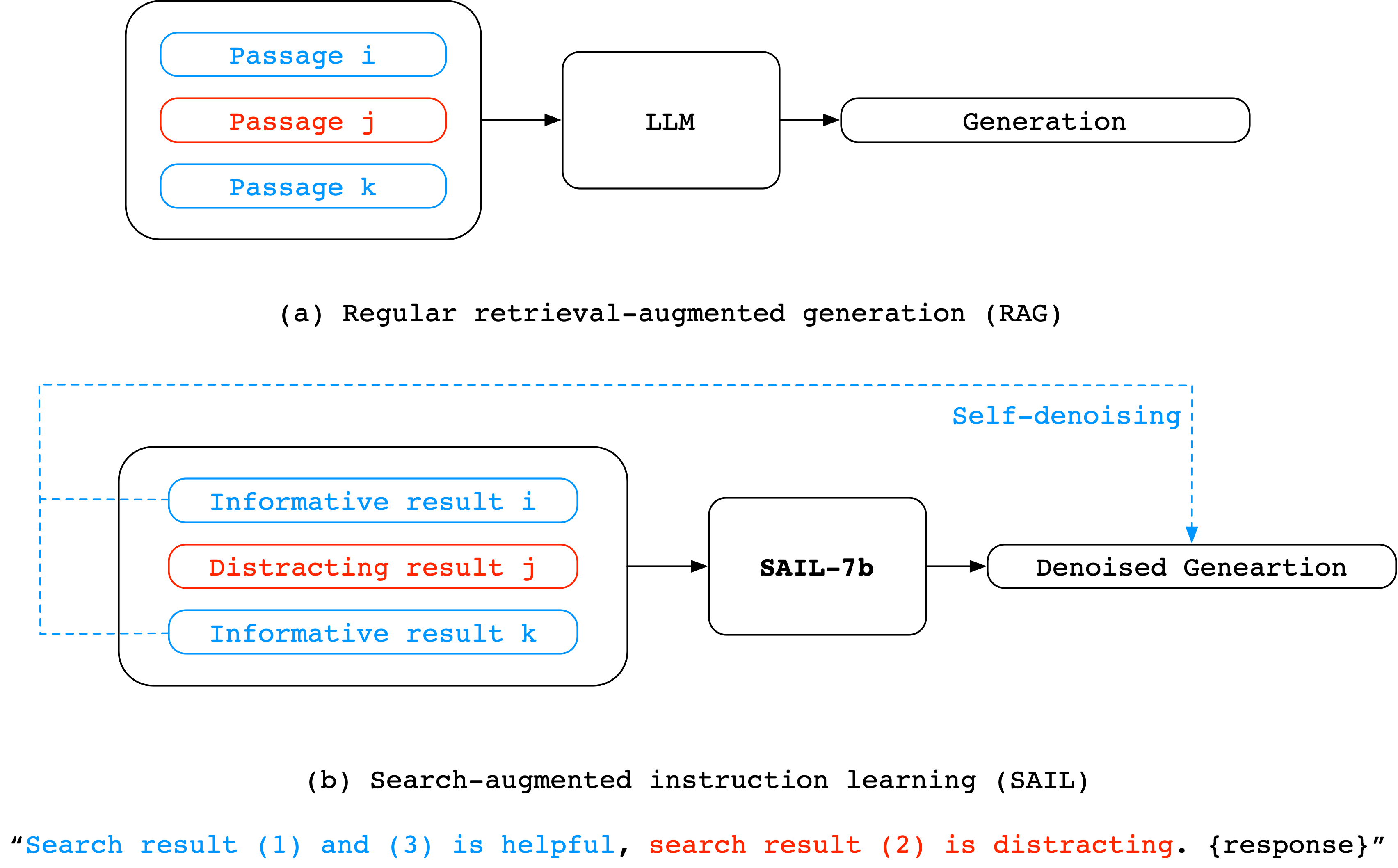

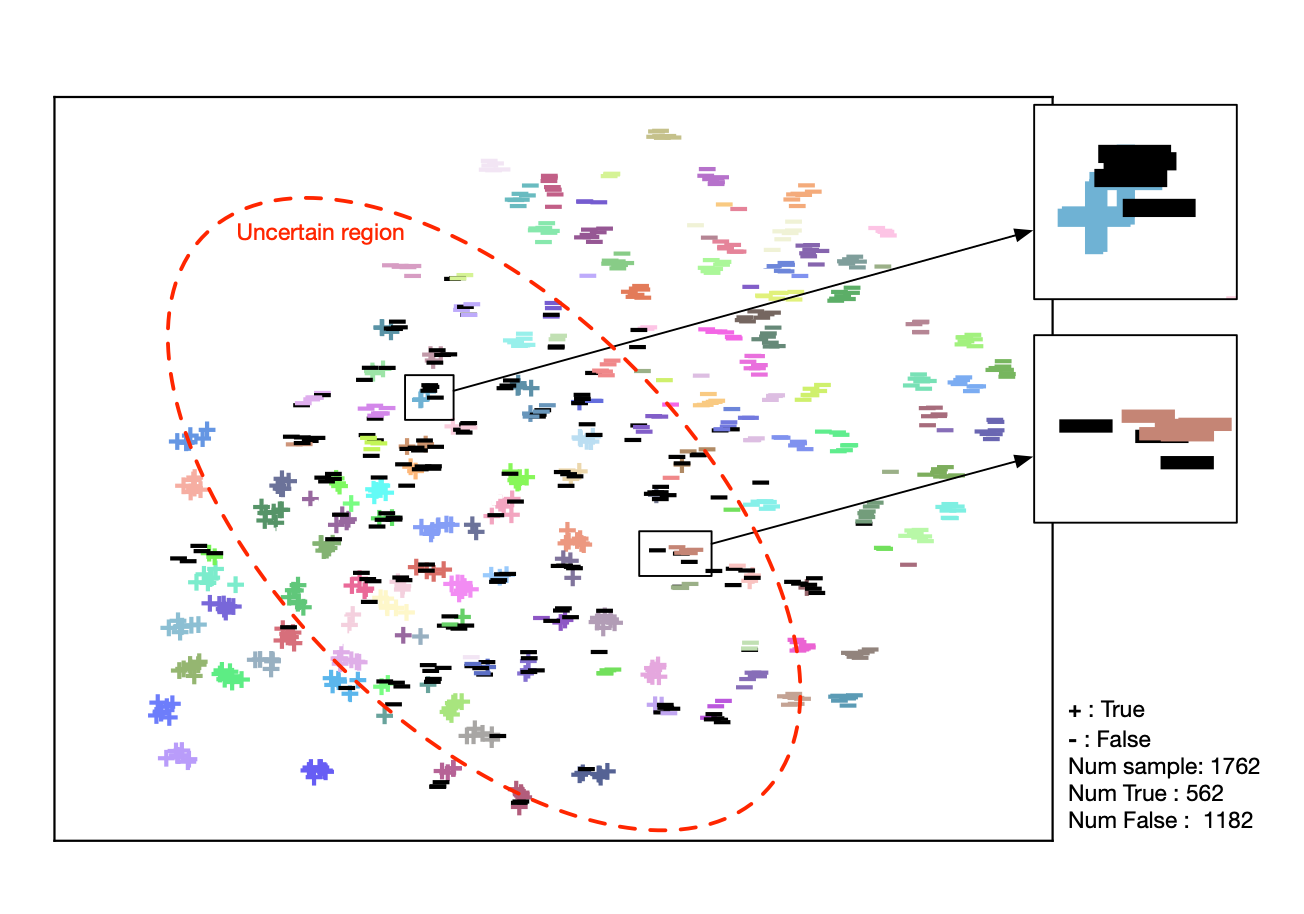

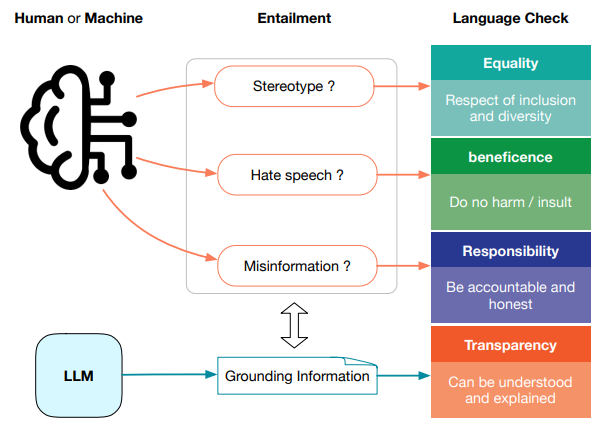

His research focuses on improving the efficiency, transparency, and reasoning ability of language models. His recent work combines natural language with formal reasoning engines, including entailment models and program interpreters. He has built small language models that outperform GPT-3 (175B) while using 1/500 of the computation, self-denoising language models that handle noise from search engine results, and natural language embedded programs that achieve accurate reasoning without task-specific examples.

Email: hyluo [at] mit [dot] edu